Lots of LEDs? It’s not Christmas yet!

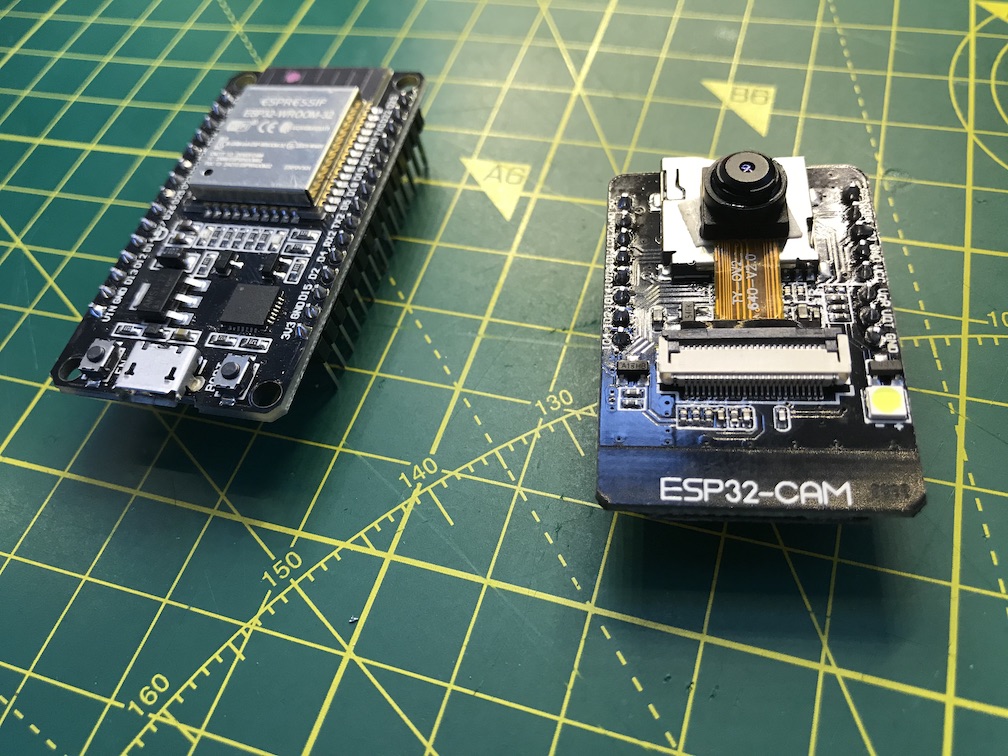

I had a big bundle of addressable WS2811 LED strings and an ESP-CAM board (an ESP32 dev board with a camera). There’s only one possible project that you can do with these components: turn the disorganised chaos of lights into something a bit more organised.

As an added bonus I’ve ended up duplicating the image processing code in JavaScript so you don’t even need a camera on your ESP32 board - you can just use a plain dev board to drive the LEDs.

You can see the results of my efforts in the video below and I’ll run through a bit more detail of the code in the following text.

The full sample code can be found here: https://github.com/atomic14/self-organising-leds

If you want to do this yourself then you will need an ESP32 dev board of some kind and of course you’ll need some kind of addressable LEDs. I’m using the FastLED library for driving the LEDs so with some small code changes you can probably support pretty much any addressable LEDs.

Our challenge comes down to a very basic problem: given access to a stream of images from a camera, identify the approximate location of each LED in 2D space. Once you’ve done that, it’s a simple problem to map from each LED’s x and y location onto a frame buffer containing the pattern you want to show.

There’s a bunch of boilerplate code to initialise the ESP-CAM - I took inspiration from the sample code here and copied the bits I needed to get the camera up and running.

An important change I’ve made is to capture only greyscale images at the lowest frame size:

    config.pixel_format = PIXFORMAT_GRAYSCALE;
    config.frame_size = FRAMESIZE_QQVGA;

And then to grab a frame from the camera we simply do:

    camera_fb_t *fb = esp_camera_fb_get();
    Frame *frame = new Frame(fb);
    esp_camera_fb_return(fb);

Our Frame class grabs a copy of the pixels along with the width and the height of the image:

    pixels = (uint8_t *)malloc(fb->height * fb->width);
    memcpy(pixels, fb->buf, fb->height * fb->width);
    width = fb->width;
    height = fb->height;
    length = fb->width * fb->height;

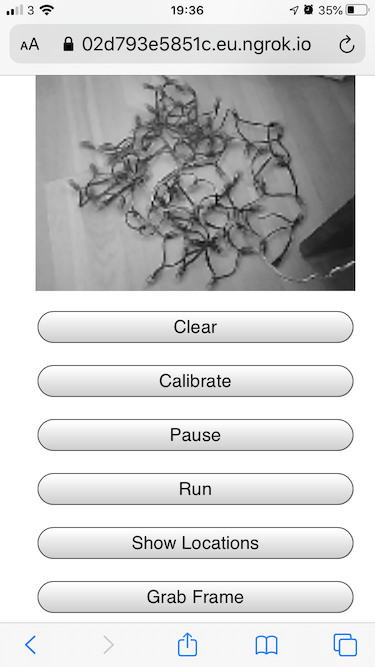

The image below shows a frame grabbed from the ESP-CAM sensor.

For the JavaScript version of this code it’s a bit more complicated. One of the biggest problems is that we need to be running over HTTPS to have access to the camera - more on this later.

    const stream = await navigator.mediaDevices.getUserMedia({
      video: { facingMode: "environment" },
      audio: false,
    });
    const canPlayListener = () => {
      // the video is loaded and we can grab frames
      onVideoReady(video);
      video.removeEventListener("canplay", canPlayListener);
    };
    video.addEventListener("canplay", canPlayListener);
    video.srcObject = stream;
    video.play();

Once we have a video stream coming from the camera we can grab a frame by drawing the video to a canvas context and then getting the imageData from it.

    function getVideoFrame(video: HTMLVideoElement, canvas: HTMLCanvasElement) {
      const width = video.videoWidth;
      const height = video.videoHeight;
      const context = canvas.getContext("2d");
      // draw the video to the canvas
      context!.drawImage(video, 0, 0, width, height);
      // get the raw image bytes
      const imageData = context!.getImageData(0, 0, width, height);
      // convert to greyscale
      const bytes = new Uint8Array(width * height);
      for (let y = 0; y < height; y++) {
        for (let x = 0; x < width; x++) {
          const r = imageData.data[(y * width + x) * 4];
          const g = imageData.data[(y * width + x) * 4 + 1];
          const b = imageData.data[(y * width + x) * 4 + 2];
          // https://en.wikipedia.org/wiki/Grayscale#Converting_color_to_grayscale
          const grey = Math.min(255, 0.299 * r + 0.587 * g + 0.114 * b);
          bytes[y * width + x] = grey;
        }
      }
      return bytes;
    }

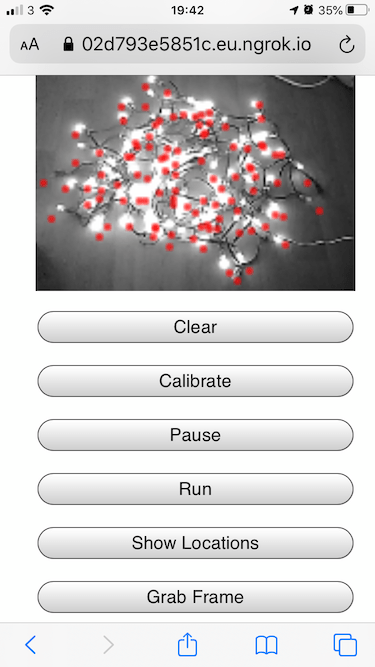

Now that we can grab frames, we just need to grab a frame with no LEDs lit, light one LED, grab another frame and then compare the two. The difference should tell us where the LED is. To avoid noise or small movements of the camera having a big impact, we apply a Gaussian blur to the captured frames before taking the difference.

This is of course a very naive and simple algorithm and could easily be improved on.
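The blur-then-compare idea described above can be sketched roughly as follows. This is a minimal illustration rather than the code from the repository: the names `GreyFrame`, `blur3x3` and `locateLed` are made up for this example, the blur is a small 3x3 Gaussian approximation, and taking the single pixel that brightened the most is an assumption about how the "difference" gets turned into a position.

```typescript
// Greyscale frame: one byte per pixel, row-major (the same layout getVideoFrame produces).
// GreyFrame, blur3x3 and locateLed are illustrative names, not taken from the project.
type GreyFrame = { pixels: Uint8Array; width: number; height: number };

// 3x3 Gaussian approximation (weights 1 2 1 / 2 4 2 / 1 2 1, sum 16).
// Pixels outside the image are replaced by the nearest edge pixel.
function blur3x3(frame: GreyFrame): GreyFrame {
  const { pixels, width, height } = frame;
  const out = new Uint8Array(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let sum = 0;
      for (let ky = -1; ky <= 1; ky++) {
        for (let kx = -1; kx <= 1; kx++) {
          const sx = Math.min(width - 1, Math.max(0, x + kx));
          const sy = Math.min(height - 1, Math.max(0, y + ky));
          // weight is 4 in the centre, 2 on the edges, 1 in the corners
          const weight = (2 - Math.abs(kx)) * (2 - Math.abs(ky));
          sum += weight * pixels[sy * width + sx];
        }
      }
      out[y * width + x] = Math.round(sum / 16);
    }
  }
  return { pixels: out, width, height };
}

// Blur both frames, then return the pixel that brightened the most
// between the "LED off" frame and the "LED on" frame.
function locateLed(
  dark: GreyFrame,
  lit: GreyFrame
): { x: number; y: number; strength: number } {
  if (dark.width !== lit.width || dark.height !== lit.height) {
    throw new Error("frames must have the same dimensions");
  }
  const a = blur3x3(dark);
  const b = blur3x3(lit);
  let best = { x: 0, y: 0, strength: -1 };
  for (let y = 0; y < a.height; y++) {
    for (let x = 0; x < a.width; x++) {
      const i = y * a.width + x;
      const diff = b.pixels[i] - a.pixels[i];
      if (diff > best.strength) {
        best = { x, y, strength: diff };
      }
    }
  }
  return best;
}
```

The `strength` value is returned so a caller could ignore LEDs that barely changed the image, for example ones hidden from the camera.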

In the C++ code of the ESP32 we do all this directly in code. In the JavaScript version we call API functions on the web interface of the ESP32 to turn LEDs on and off and once we’ve finished the processing send up the calculated positions of the LEDs to the board.
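For the JavaScript flow, the calibration loop might look something like the sketch below. The `LedController` interface and its `setLed` and `sendPositions` methods are hypothetical stand-ins for whatever API calls the ESP32 web interface actually exposes, and `grabFrame` and `locate` are passed in so the loop does not depend on any particular camera or image-processing code. Grabbing a fresh dark frame for every LED is also an assumption made here.

```typescript
type LedPosition = { x: number; y: number };
type GreyFrame = { pixels: Uint8Array; width: number; height: number };

// Hypothetical wrapper around the board's web API; the real endpoints may differ.
interface LedController {
  setLed(index: number, on: boolean): Promise<void>;
  sendPositions(positions: LedPosition[]): Promise<void>;
}

// For each LED: grab a dark frame, light the LED, grab a lit frame,
// then let `locate` work out where the LED is from the two frames.
async function calibrate(
  ledCount: number,
  controller: LedController,
  grabFrame: () => Promise<GreyFrame>,
  locate: (dark: GreyFrame, lit: GreyFrame) => LedPosition,
  settleMs = 0 // optional pause so the camera catches up with the LED state
): Promise<LedPosition[]> {
  const wait = (ms: number) =>
    new Promise<void>((resolve) => setTimeout(resolve, ms));
  const positions: LedPosition[] = [];
  for (let i = 0; i < ledCount; i++) {
    await controller.setLed(i, false);
    await wait(settleMs);
    const dark = await grabFrame();
    await controller.setLed(i, true);
    await wait(settleMs);
    const lit = await grabFrame();
    await controller.setLed(i, false);
    positions.push(locate(dark, lit));
  }
  // once processing has finished, send the calculated positions up to the board
  await controller.sendPositions(positions);
  return positions;
}
```

Keeping the controller behind an interface makes it easy to try the loop against a fake board before pointing it at real hardware.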

In our ESP32 code we create a framebuffer and draw patterns into it. We then use the locations of each LED to work out what color it should be.
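The device code for this step is C++, but the lookup itself is language-agnostic, so here is a rough TypeScript sketch of the idea. The names `coloursForLeds` and `Rgb` are invented for this example, and scaling the LED positions to the bounding box of all detected LEDs is an assumption about how the mapping could be done, not a description of the project's code.

```typescript
type LedPosition = { x: number; y: number };
type Rgb = { r: number; g: number; b: number };

// Scale each LED position (in camera pixels) into the framebuffer's coordinate
// space and read the colour stored at that cell. The framebuffer is row-major.
function coloursForLeds(
  positions: LedPosition[],
  framebuffer: Rgb[],
  fbWidth: number,
  fbHeight: number
): Rgb[] {
  if (positions.length === 0) return [];
  const xs = positions.map((p) => p.x);
  const ys = positions.map((p) => p.y);
  const minX = Math.min(...xs);
  const minY = Math.min(...ys);
  // avoid dividing by zero when all LEDs share one row or column
  const spanX = Math.max(1, Math.max(...xs) - minX);
  const spanY = Math.max(1, Math.max(...ys) - minY);
  return positions.map((p) => {
    const fx = Math.round(((p.x - minX) / spanX) * (fbWidth - 1));
    const fy = Math.round(((p.y - minY) / spanY) * (fbHeight - 1));
    return framebuffer[fy * fbWidth + fx];
  });
}
```

Because the result is one colour per LED in string order, it can be copied straight into whatever LED array the driver library expects.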

We need HTTPS to access the camera, and the API calls need to be HTTPS as well (we can’t mix content nowadays!), so we need a way of serving both the UI and the API from the ESP32 web server over HTTPS. There are web servers for the ESP32 that support HTTPS and self-signed certificates, but this leads to other problems and would require a rewrite of the device code. An easy workaround is to use a service such as ngrok to provide a secure URL from the cloud through our computer to the ESP32 device. Slightly convoluted, but it works!

This is only needed if you are not using an ESP-CAM and have to use your phone’s camera for calibrating the LEDs. Sign up for a free account with ngrok and then find the IP address of your ESP32 board:

    ping espcam.local
    PING espcam.local (10.0.1.17): 56 data bytes
    64 bytes from 10.0.1.17: icmp_seq=0 ttl=255 time=14.343 ms
    64 bytes from 10.0.1.17: icmp_seq=1 ttl=255 time=6.493 ms

Take the IP address and ask ngrok to start proxying requests for us:

    ngrok http 10.0.1.17 -inspect=false

You’ll need steady hands - there’s quite a lot of latency going on so the space between turning an LED on and off and grabbing a frame can be quite large. I slightly moved as I was taking the frame to show the locations so the positions are slightly off.

Check out the video to see how well it works - surprisingly well for such a simple algorithm.