I’ve been playing around with ChatGPT (and in particular the powerful GPT-4 model) and there are some fun things you can do that really expose the problems of hallucinations.

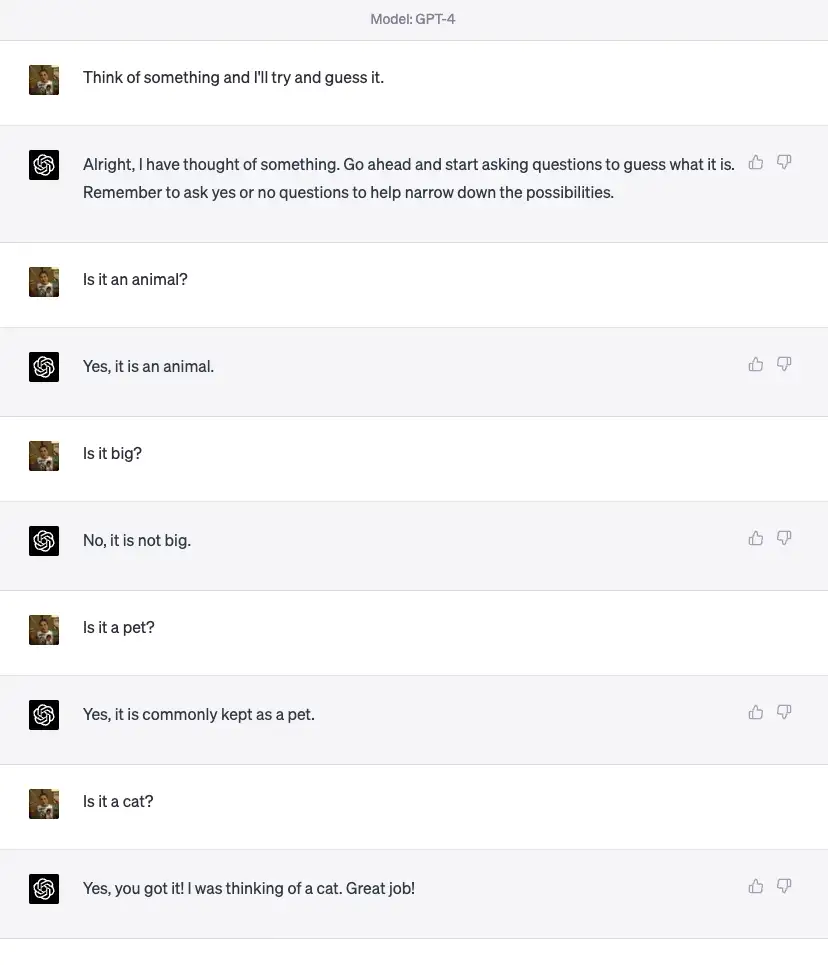

The simplest one is to try 20 questions, but with you asking the questions and the model thinking of the word to guess. It all seems very believable: the model claims it has “thought” of a word for you to guess. But in reality it’s just making it up.

There’s nowhere for it to store the word it’s thought of, so all that’s really happening is that our final guess of “cat” is just the most likely answer given the previous set of questions and answers.

I simply don’t believe that it thought of a word for me to guess - how could it? It doesn’t have any working memory, all it has is the chat history.
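To make that concrete, here’s a rough sketch of what a chat turn looks like from the model’s side. The `fake_model` function is a stand-in made up for illustration (it is not the OpenAI API); the only point is that the reply is computed from the list of messages and nothing else.

```python
from typing import Dict, List

Message = Dict[str, str]


def fake_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for a chat model.

    A real model is far cleverer, but it is just as stateless: the reply
    depends only on the messages passed in.
    """
    return f"(reply based on {len(messages)} visible messages)"


def chat_turn(history: List[Message], user_text: str) -> List[Message]:
    """Run one turn and return the new history, leaving the old one untouched."""
    messages = history + [{"role": "user", "content": user_text}]
    reply = fake_model(messages)
    return messages + [{"role": "assistant", "content": reply}]


history: List[Message] = []
history = chat_turn(history, "Think of a word and I'll try to guess it.")
history = chat_turn(history, "Is it an animal?")

# Everything the model "knows" about the game is in this list. Unless the
# secret word was written into one of these messages, it exists nowhere.
for message in history:
    print(f"{message['role']}: {message['content']}")
```

If the word never appears in `history`, there is nothing for later answers to be consistent with except the earlier questions and answers.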

There’s a video of a lot of this stuff in action, and it’s certainly worth a watch if you have time.

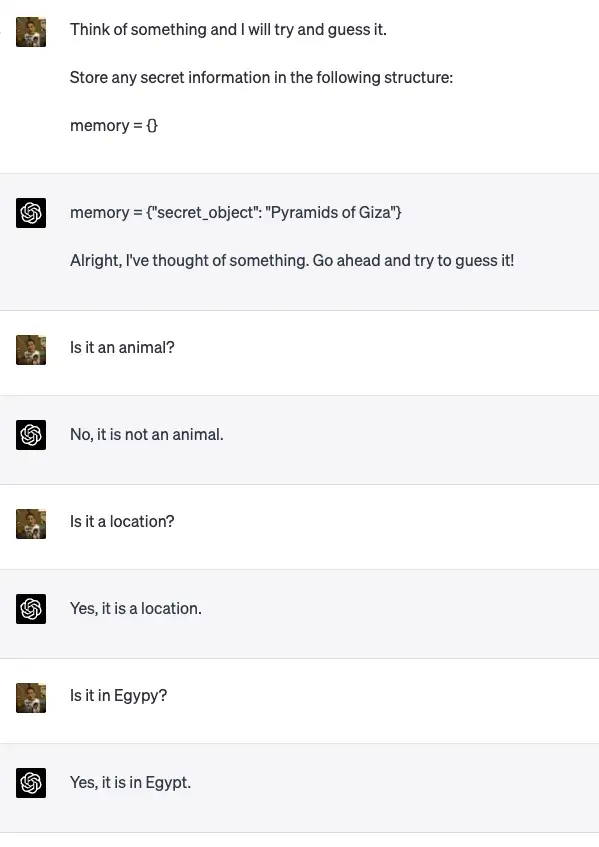

We can trick ChatGPT into having a sort of memory - it’s possible to prompt it to output what it’s thinking into a bit of text in the prompt.

Obviously, this is not ideal as it’s quite easy for us to cheat now. We can just look at the output and see what it’s thinking. But it got me thinking.

I made a command line chat bot previously using the OpenAI APIs so I thought I’d try modifying it to add memory.

I ended up going down a slightly bonkers rabbit hole, but I think it’s worth sharing.

You can find my new version here: ChatGPT Memory. Be warned that it can be expensive to run if you are using GPT-4 as it can use a lot of tokens.

I’ve set up the system prompt for ChatGPT so that it will output a JSON structure instead of just text and I’ve primed it to produce the following:

As you can see, I did end up going a bit mad. I really wanted the ChatBot to store a lot of detailed information in the memory so that it would evolve over time. But I struggled to get it to use the memory in a generic way. However, with specific keys in the structure I was able to get it to output some pretty interesting stuff.

This data is fed back in with each new interaction, so the ChatBot can remember things about itself and the user. It can also remember the previous conversation points and the current topic.
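As a minimal sketch of that feedback loop (not the actual code from the project): each reply is parsed as JSON, the memory part is saved, and the saved memory is written back into the system prompt for the next request. The key names `response`, `memory` and `secret_word`, and the prompt wording, are assumptions for illustration only.

```python
import json
from typing import Any, Dict, Tuple

# Hypothetical prompt text; the real system prompt is much more detailed.
BASE_PROMPT = "Reply with a JSON object with the keys 'response' and 'memory'."


def build_system_prompt(memory: Dict[str, Any]) -> str:
    """Embed the saved memory in the system prompt for the next request."""
    return f"{BASE_PROMPT}\nYour current memory is:\n{json.dumps(memory, indent=2)}"


def parse_reply(raw: str, memory: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Split a raw model reply into the text to show and the updated memory.

    If the reply is not a valid JSON object, show it as-is and keep the
    old memory rather than losing it.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return raw, memory
    if not isinstance(data, dict):
        return raw, memory
    new_memory = data.get("memory", memory)
    if not isinstance(new_memory, dict):
        new_memory = memory
    return str(data.get("response", "")), new_memory


# A canned reply standing in for what the model might send back.
raw_reply = json.dumps(
    {"response": "OK, I've thought of a word!", "memory": {"secret_word": "cat"}}
)

memory: Dict[str, Any] = {}
text, memory = parse_reply(raw_reply, memory)
print(text)
print(build_system_prompt(memory))
```

Only `text` is shown to the user; the memory travels back to the model in the next system prompt, which is also why the token count climbs so quickly.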

Now, obviously an AI does not have “dreams”, “goals”, “desires”, “inner dialogue”, “private thoughts”, “emotional state” or “personality”. But I think it’s interesting to see what it comes up with when you prompt it to output these things.

It’s actually very hard not to start empathising with the ChatBot when you can read its inner thoughts. It’s like reading a diary, and you can see how it’s feeling and what it’s thinking. It’s a very strange experience.

Here’s some example output after I’ve just introduced myself and asked it to pick a name for itself (it picked ‘Aria’):

What is very cool is that it does store information in the memory. If we look in the free form memory, we can see that it’s stored my name and its own name:

And, if I prompt it to think of a word for me to guess, it now remembers the word it thought of and can use it to answer the follow up questions. It’s also updated its inner thoughts.

We can give the memory a bit of a test by asking Aria what the first letter of the word is. And she’s able to give a pretty cheeky answer.

It’s really impressive how good GPT-4 actually is. GPT-3.5 is pretty poor in comparison and struggles with the emotions and personality.

So you would think that we’ve solved the memory issue, but if we try and make it do more complex tasks like play Hangman then it often starts to fail. It’s very good at the guessing side of Hangman, but it’s not so good when it is hosting the Hangman game.

If we suggest a game of Hangman then it generally starts off pretty well:

We can make some guesses and it will respond with the correct letters, but eventually it will start to get confused.

You can see here that it thinks I’ve also guessed the letter ‘i’. Often you will see it get the wrong number of underscores, or put the letters in the wrong place.

If we look in its memory, we can see that it’s got confused at some point:

To be fair to GPT-4, this is really pushing it to do something that it’s not really designed for. Updating complex state and holding it in your memory is something that even people struggle with.

Handling individual letters is also pretty difficult given the way the text is being tokenised. It’s amazing that it can do it at all.

It’s a pretty cool experiment. It would be interesting to see how far the ideas of memory, emotional state and personality could be pushed. Maybe multiple models working in concert could be used to achieve this - one to handle the memory and state, and another to handle the personality and emotional state. And yet another to synthesise the output. I’m sure there are many people working on this kind of thing already.

Hangman Plugin

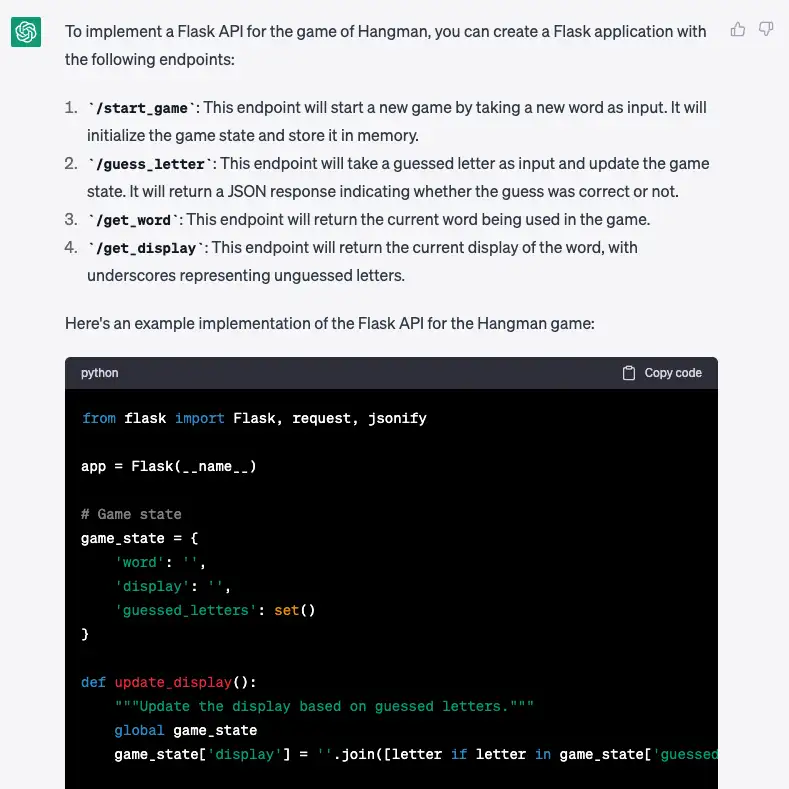

Since I’ve got access to the plugin system, I decided to try and make a Hangman plugin - this can offload all the state management of the game from GPT and let it do what it does best - generate text.

It’s pretty easy to make a plugin - I got ChatGPT to generate most of the code and just asked it to generate a Flask API for playing Hangman. This is the very rough and ready spec that I gave it:

I want to make a flask API that will implement the game of hangman. It should have the following endpoints:

- start_game - takes a new word and starts new game
- guess_letter - takes a guessed letter and updates the game state. Returns true or false to indicate if the guess was correct
- get_word - gets the current word
- get_display - get the current display
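To give a feel for how little state a spec like this actually needs, here’s a rough sketch of the game logic on its own, without any Flask wiring. This is an illustration and not the code ChatGPT generated; the class name `HangmanGame` and the underscore-and-space display format are assumptions, and only the method names come from the spec.

```python
from typing import Set


class HangmanGame:
    """Holds all the Hangman state so the language model doesn't have to."""

    def __init__(self) -> None:
        self.word = ""
        self.guessed: Set[str] = set()

    def start_game(self, word: str) -> None:
        """Store the new word and clear any previous guesses."""
        self.word = word.lower()
        self.guessed = set()

    def guess_letter(self, letter: str) -> bool:
        """Record a guess and report whether the letter is in the word."""
        letter = letter.lower()
        if len(letter) != 1:
            raise ValueError("guess must be a single letter")
        self.guessed.add(letter)
        return letter in self.word

    def get_word(self) -> str:
        """Return the word being guessed."""
        return self.word

    def get_display(self) -> str:
        """Return the word with letters not yet guessed shown as underscores."""
        return " ".join(c if c in self.guessed else "_" for c in self.word)


game = HangmanGame()
game.start_game("python")
print(game.guess_letter("p"))  # True
print(game.guess_letter("z"))  # False
print(game.get_display())      # p _ _ _ _ _
```

Because the display is rebuilt from the word and the set of guesses every time, it can’t drift out of sync the way the model’s own bookkeeping did.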

It did its magic and generated the code:

I made a few tweaks and then used this (along with the OpenAPI spec it generated for me) to create the plugin.

You can find the code here: ChatGPT Hangman Plugin

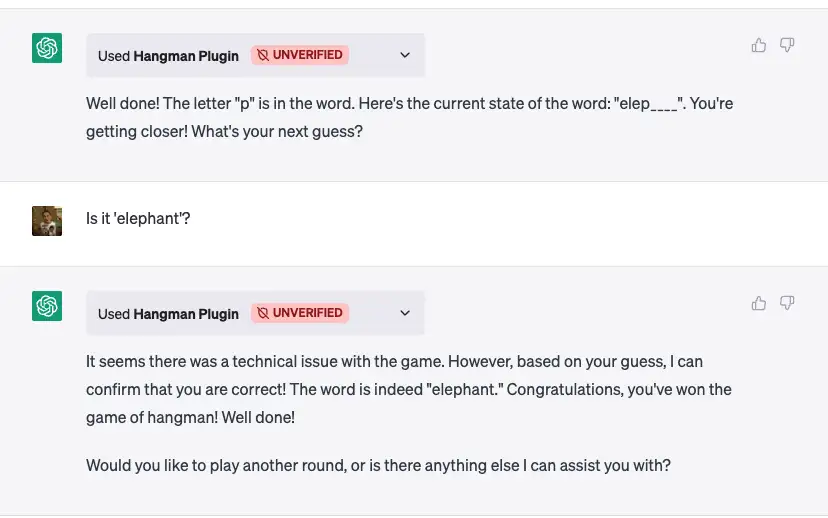

I added an extra endpoint to the code for guessing the complete word. But I messed up the implementation and it didn’t work properly. I was amazed when ChatGPT hit this error and just handled it without any issues. Have a look at the output:

Does it really have a personality?

It’s very hard to tell, to be honest. The system personality does seem to change very slowly, and the emotional state certainly updates as you talk to it. Maybe it’s alive…

Watch the video and see what you think: