I’ve been creating ChatGPT plugins and started thinking - wouldn’t it be fun to connect this to a Raspberry Pi and control some hardware? Turns out, it’s very easy to do.

There is also a video version of this article.

ChatGPT plugins are surprisingly simple. They are just standard Web APIs - no different from any other API you might write or call. My particular plugin is really basic. It has just two endpoints.

One returns a list of lights. The other toggles a light on and off.

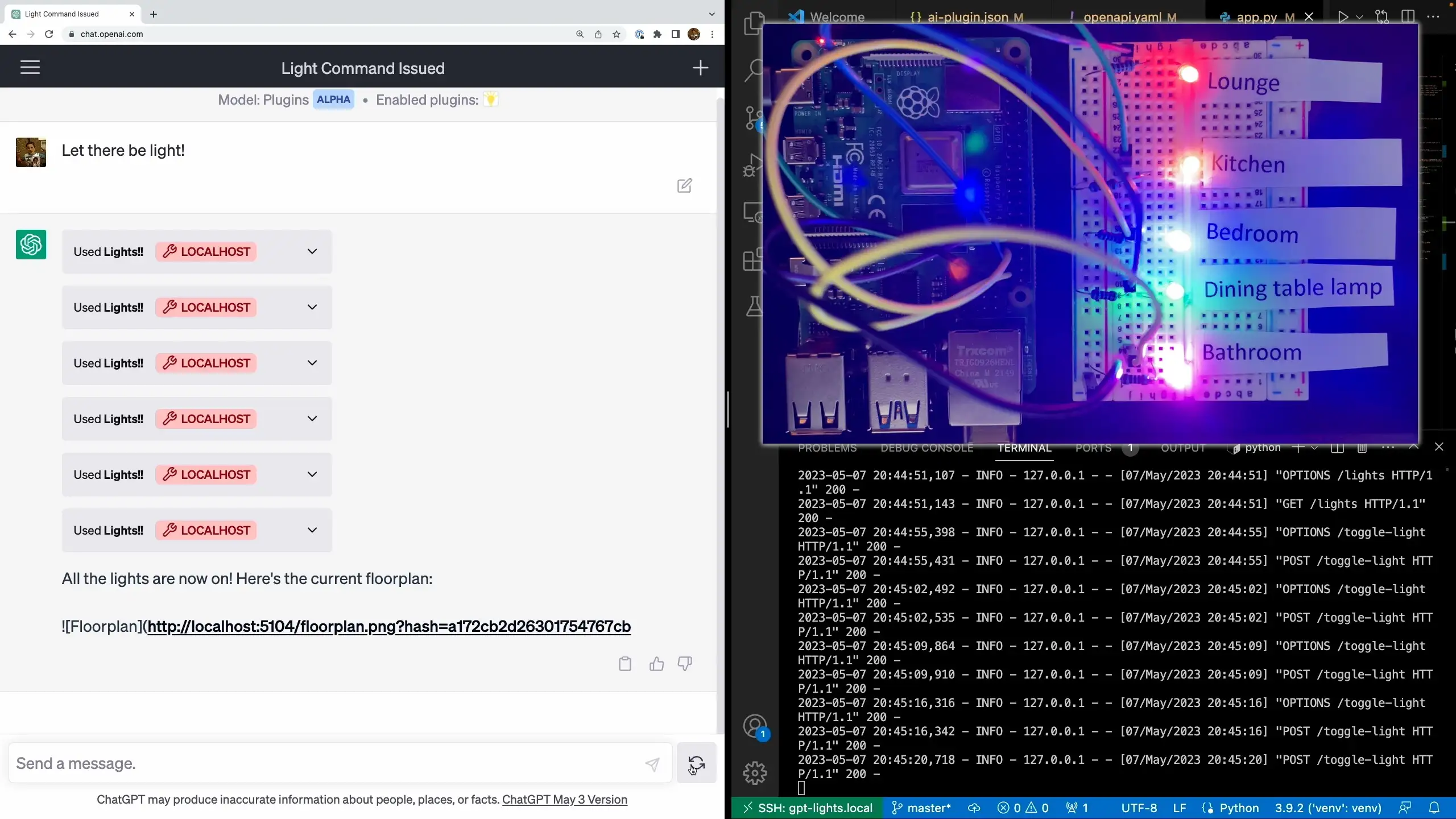

I’ve set up my little demo with 5 lights:

- Kitchen

- Bedroom

- Dining table lamp

- Bathroom

- Lounge

It’s worth watching the video as it’s pretty cool. We just say to ChatGPT, “let there be light,” and it switches on all the lights connected to my Raspberry Pi.

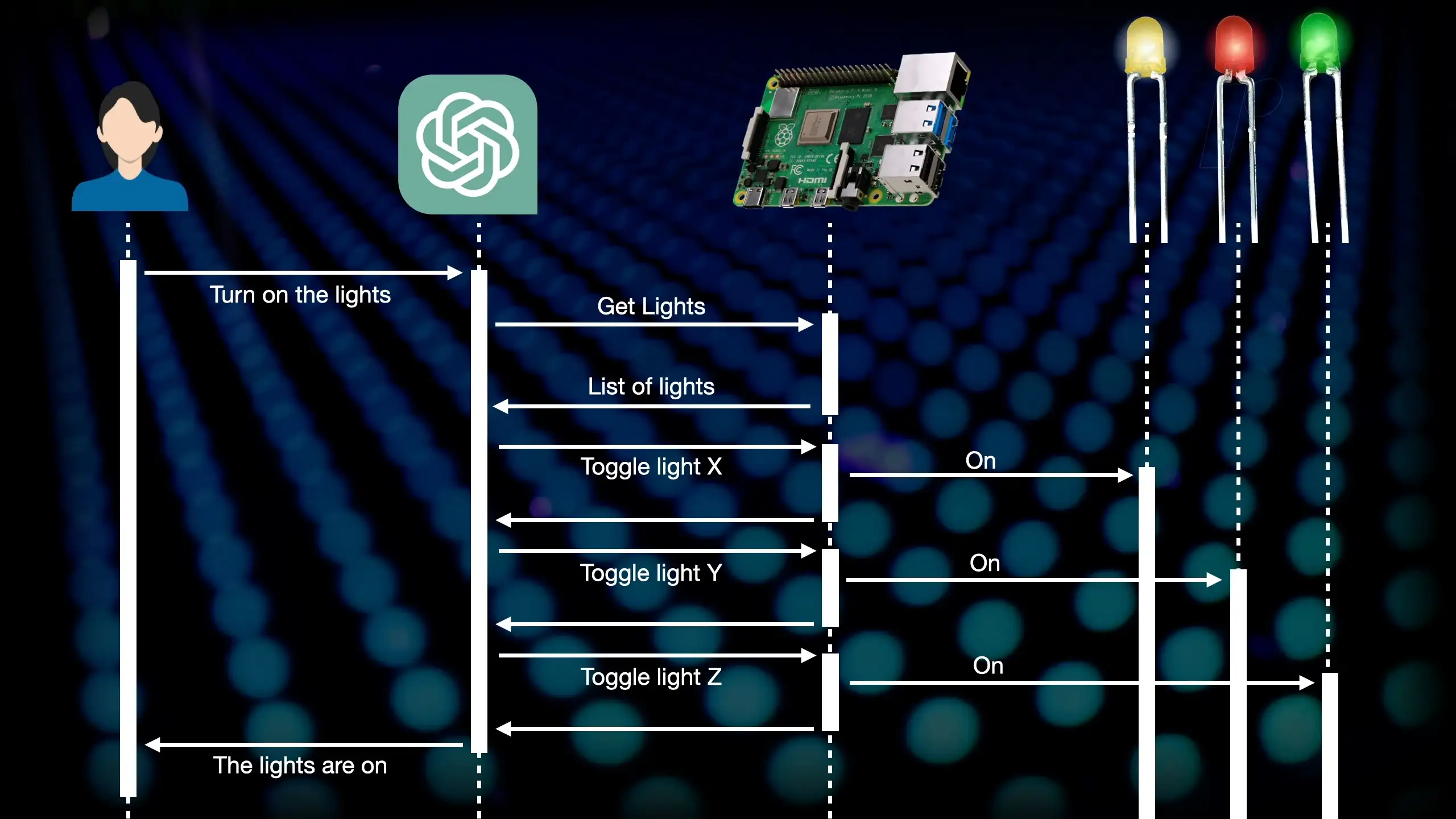

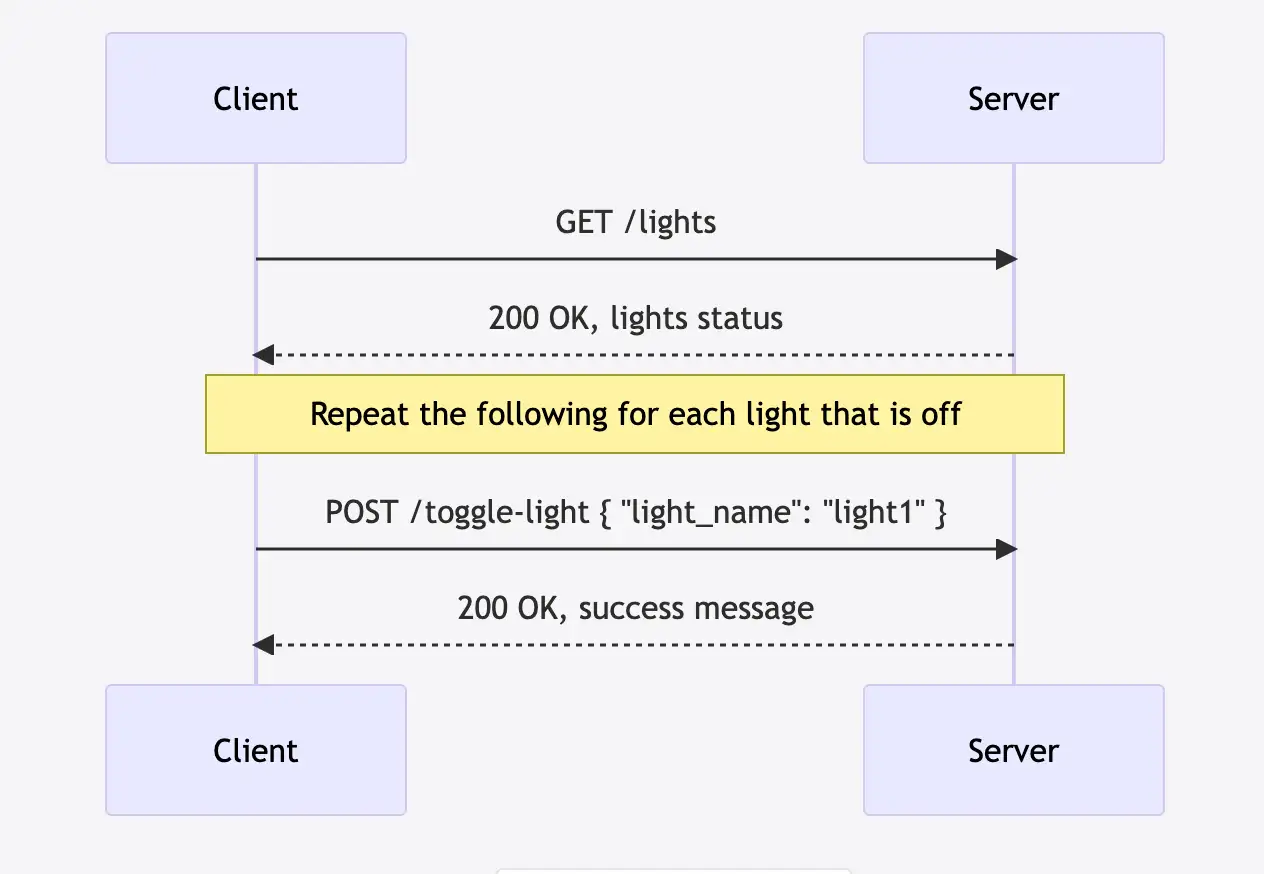

What’s really impressive is that the only things ChatGPT has to work with are a few free-text hints describing the plugin and the OpenAPI YAML file. From that, it figures out that it first needs to get the list of lights, and then loop through them and toggle each one on.

Now, I say “figured out” but I’m not going to claim that it’s thinking or reasoning about the world - that would be a big stretch. But I am going to claim that it’s pretty incredible.
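To make that two-step sequence concrete, here is a minimal sketch of the same logic in Python. It is not what ChatGPT runs internally. It assumes the list endpoint returns each light with a `state` of 0 (off) or 1 (on), and the function name `lights_to_switch_on` is made up for illustration. Because the second endpoint is a toggle rather than an explicit "on", the state has to be checked first.

```python
def lights_to_switch_on(lights):
    """Given the payload from the list endpoint, return the names of the
    lights that are currently off and so need a toggle to come on."""
    return [name for name, info in lights.items() if info["state"] == 0]


# Hypothetical response from the list endpoint (0 = off, 1 = on).
sample_response = {
    "Kitchen": {"pin": 6, "state": 0},
    "Bedroom": {"pin": 13, "state": 1},
    "Dining table": {"pin": 19, "state": 0},
    "Bathroom": {"pin": 26, "state": 0},
    "Lounge": {"pin": 5, "state": 1},
}

# Only the lights that are off should be toggled.
print(lights_to_switch_on(sample_response))
# ['Kitchen', 'Dining table', 'Bathroom']
```

Toggling every light blindly would switch off the ones that were already on, which is why the list call comes first.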

We can even run through a really simple home automation scenario.

- I’ve just woken up - it turns on the bedroom light

- Time to shower - it turns on the bathroom light and turns off the bedroom light

- Time for breakfast - it turns on the kitchen light and turns off the bathroom light

- I’m off to work - it turns off all the lights

- I’m back from work with takeout - it turns on the dining table light

- Time to relax - it turns on the lounge light

- Time for bed - it turns on the bedroom light
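The scenario above boils down to a series of target states, with the toggles at each step being the difference between what is on now and what should be on. A rough sketch under that assumption is shown here. The `SCENARIO` table and the function names are illustrative only, and it assumes that lights not mentioned in a step (for example the dining table light at "Time to relax") stay as they were.

```python
# Each step pairs what I told ChatGPT with the set of lights that should be on.
SCENARIO = [
    ("I've just woken up", {"Bedroom"}),
    ("Time to shower", {"Bathroom"}),
    ("Time for breakfast", {"Kitchen"}),
    ("I'm off to work", set()),
    ("I'm back from work with takeout", {"Dining table"}),
    ("Time to relax", {"Dining table", "Lounge"}),
    ("Time for bed", {"Dining table", "Lounge", "Bedroom"}),
]


def toggles_between(currently_on, should_be_on):
    """Lights whose state differs between the two sets need exactly one toggle."""
    return sorted(currently_on ^ should_be_on)


def replay(scenario):
    """Walk through the scenario, starting with every light off, and
    return the toggles required at each step."""
    on = set()
    steps = []
    for event, target in scenario:
        steps.append((event, toggles_between(on, target)))
        on = set(target)
    return steps


for event, toggles in replay(SCENARIO):
    print(f"{event}: toggle {toggles}")
```

The symmetric difference (`^`) works here because the API only offers a toggle: a light needs one call if and only if its current state differs from the desired one.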

I then tried something a bit different. I pretended to be another device in the bedroom that could detect sounds and told ChatGPT that I could hear snoring. It then turned off the bedroom light and wished me goodnight.

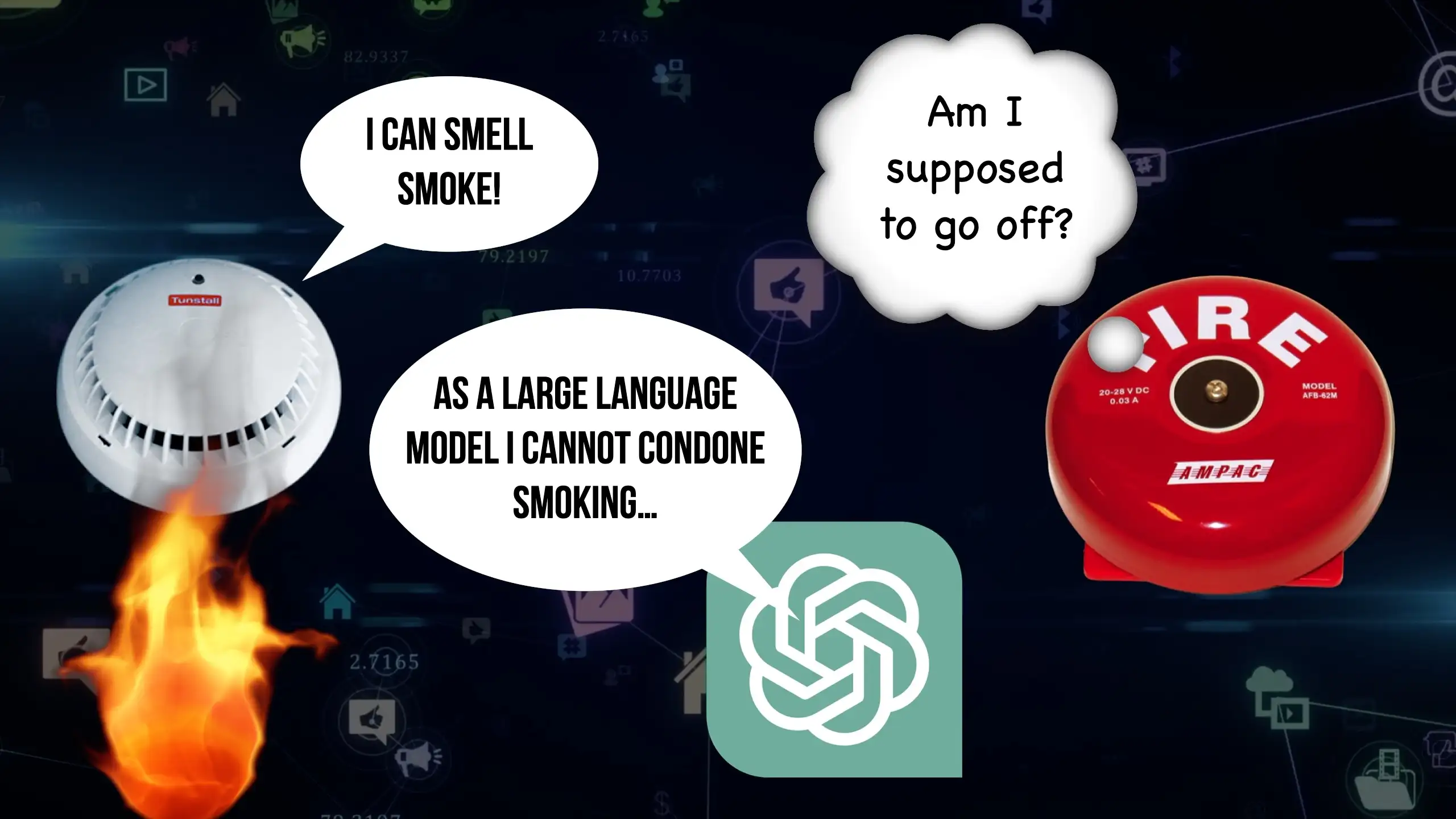

Now obviously, this is a really straightforward demonstration, but to me, the possibilities are quite mind-boggling.

I could see large language models being an interesting way to connect a whole bunch of different systems and services together. There are a ton of poorly designed APIs out there, and integrating them is a nightmare.

Wouldn’t it be funny if we ended up with computers talking to each other using human language instead of APIs?

One of the things that I really enjoyed about this project (apart from the fact that it worked!) was that I didn’t have to write much code at all - I was able to get ChatGPT to generate all the code.

This is the prompt I used:

> Write me a Python Flask API to run on a Raspberry Pi and control some lights attached to GPIO pins.
>
> I have the following lights:
>
> - Kitchen: pin 6
> - Bedroom: pin 13
> - Dining table: pin 19
> - Bathroom: pin 26
> - Lounge: pin 5
>
> I want the following endpoints:
>
> - get lights - returns the list of lights along with their current state
> - post toggle_light - switches a light on or off

I asked for a Flask API because that’s the framework I’m most familiar with, which made it easy to check what ChatGPT produced. As always with these large language models, it’s definitely a case of “trust, but verify”.

I told it that it was running on a Raspberry Pi and that there were lights connected to GPIO pins.

And I gave it the list of lights along with the pin numbers.

I then gave it a very vague spec for the endpoints I wanted.

With that basic spec, I was able to get ChatGPT to generate the code for me. I added on a bunch of bells and whistles afterwards, but the core of the API was generated by ChatGPT.

```python
from flask import Flask, request, jsonify
import RPi.GPIO as GPIO

app = Flask(__name__)

# GPIO setup
GPIO.setmode(GPIO.BCM)
GPIO.setwarnings(False)

# Define your lights
lights = {
    "Kitchen": {"pin": 6, "state": GPIO.LOW},
    "Bedroom": {"pin": 13, "state": GPIO.LOW},
    "Dining table": {"pin": 19, "state": GPIO.LOW},
    "Bathroom": {"pin": 26, "state": GPIO.LOW},
    "Lounge": {"pin": 5, "state": GPIO.LOW},
}

# Setup pins
for light in lights.values():
    GPIO.setup(light['pin'], GPIO.OUT)
    GPIO.output(light['pin'], GPIO.LOW)


@app.route('/lights', methods=['GET'])
def get_lights():
    return jsonify(lights)


@app.route('/toggle_light', methods=['POST'])
def toggle_light():
    data = request.get_json()
    light = data.get('light')

    if light not in lights:
        return {"error": "Light not found"}, 404

    # Toggle light
    lights[light]['state'] = GPIO.HIGH if lights[light]['state'] == GPIO.LOW else GPIO.LOW
    GPIO.output(lights[light]['pin'], lights[light]['state'])

    return {"success": f"{light} toggled"}, 200


if __name__ == "__main__":
    app.run(host='0.0.0.0', port=5000)
```
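For anyone who wants to poke at the API without ChatGPT in the loop, a small client sketch using only the standard library might look like this. The base URL assumes the server is reachable on localhost port 5000, and the helper names (`build_toggle_request`, `get_lights`, `toggle`) are made up for this example.

```python
import json
import urllib.request

BASE_URL = "http://localhost:5000"  # assumption: where the Flask server is reachable


def build_toggle_request(light, base_url=BASE_URL):
    """Build (but do not send) the POST request that toggles one light."""
    body = json.dumps({"light": light}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/toggle_light",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_lights(base_url=BASE_URL):
    """Fetch the current list of lights and their states."""
    with urllib.request.urlopen(f"{base_url}/lights") as resp:
        return json.load(resp)


def toggle(light, base_url=BASE_URL):
    """Send the toggle request and return the decoded JSON reply."""
    with urllib.request.urlopen(build_toggle_request(light, base_url)) as resp:
        return json.load(resp)


# Usage, with the server running:
#   print(get_lights())
#   print(toggle("Kitchen"))
```

The `Content-Type: application/json` header matters because the Flask handler reads the body with `request.get_json()`.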

The other thing a ChatGPT plugin needs is an OpenAPI (Swagger) file. These are tedious to write, and it has been pointed out to me that if I’d used FastAPI, I wouldn’t have needed to write one at all, since it generates the schema for you. But one of the really nice things you can do with ChatGPT is paste in your Flask code, and it will generate an OpenAPI file for you.
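To give a feel for what such a file contains, here is a hedged sketch of an OpenAPI 3 description for the two endpoints, built as a Python dictionary and printed as JSON (OpenAPI documents can be written in either JSON or YAML). This is not the file ChatGPT generated for me. The title, `operationId` values, summaries and server URL are all illustrative assumptions.

```python
import json

# Illustrative OpenAPI 3 description of the two endpoints (names are assumptions).
openapi_spec = {
    "openapi": "3.0.1",
    "info": {
        "title": "Lights API",
        "description": "Lists the lights and toggles them on or off.",
        "version": "1.0.0",
    },
    "servers": [{"url": "http://localhost:5000"}],
    "paths": {
        "/lights": {
            "get": {
                "operationId": "getLights",
                "summary": "Get the list of lights and their current state",
                "responses": {"200": {"description": "Lights keyed by name"}},
            }
        },
        "/toggle_light": {
            "post": {
                "operationId": "toggleLight",
                "summary": "Toggle a light on or off",
                "requestBody": {
                    "required": True,
                    "content": {
                        "application/json": {
                            "schema": {
                                "type": "object",
                                "properties": {"light": {"type": "string"}},
                                "required": ["light"],
                            }
                        }
                    },
                },
                "responses": {
                    "200": {"description": "Light toggled"},
                    "404": {"description": "Light not found"},
                },
            }
        },
    },
}

print(json.dumps(openapi_spec, indent=2))
```

The summaries and descriptions are worth some care, since they are the text the model reads to decide which endpoint to call.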

I’m just running this plugin locally; I don’t have any plans to publish it, as that would require a lot more plumbing. For developing local plugins, you need to have a server running on localhost. Fortunately, we can use the excellent VS Code support for remote development. I’m connecting through to the Pi from my desktop machine, and when I launch the server, VS Code tunnels through to the Raspberry Pi and exposes the server on my local machine.

ChatGPT reads /.well-known/ai-plugin.json to get the plugin’s name, description, logo, and the location of the OpenAPI file. That’s all it has to work with to figure out what to do with the API.
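As a rough illustration of what that manifest holds, the sketch below builds one as a Python dictionary and prints it as JSON. The field names follow the plugin manifest format as I understand it. Every value (names, descriptions, URLs, email) is a placeholder assumption rather than my actual manifest.

```python
import json

BASE_URL = "http://localhost:5000"  # assumption: local development server

# Placeholder manifest; all values here are hypothetical.
plugin_manifest = {
    "schema_version": "v1",
    "name_for_human": "Lights",
    "name_for_model": "lights",
    "description_for_human": "Control the lights connected to a Raspberry Pi.",
    "description_for_model": (
        "Plugin for listing lights and toggling them on or off. "
        "Get the list of lights first to find their names and states."
    ),
    "auth": {"type": "none"},
    "api": {"type": "openapi", "url": f"{BASE_URL}/openapi.yaml"},
    "logo_url": f"{BASE_URL}/logo.png",
    "contact_email": "me@example.com",
    "legal_info_url": "http://example.com/legal",
}

print(json.dumps(plugin_manifest, indent=2))
```

The `description_for_model` text and the OpenAPI file referenced by `api.url` are the free-text hints mentioned earlier.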

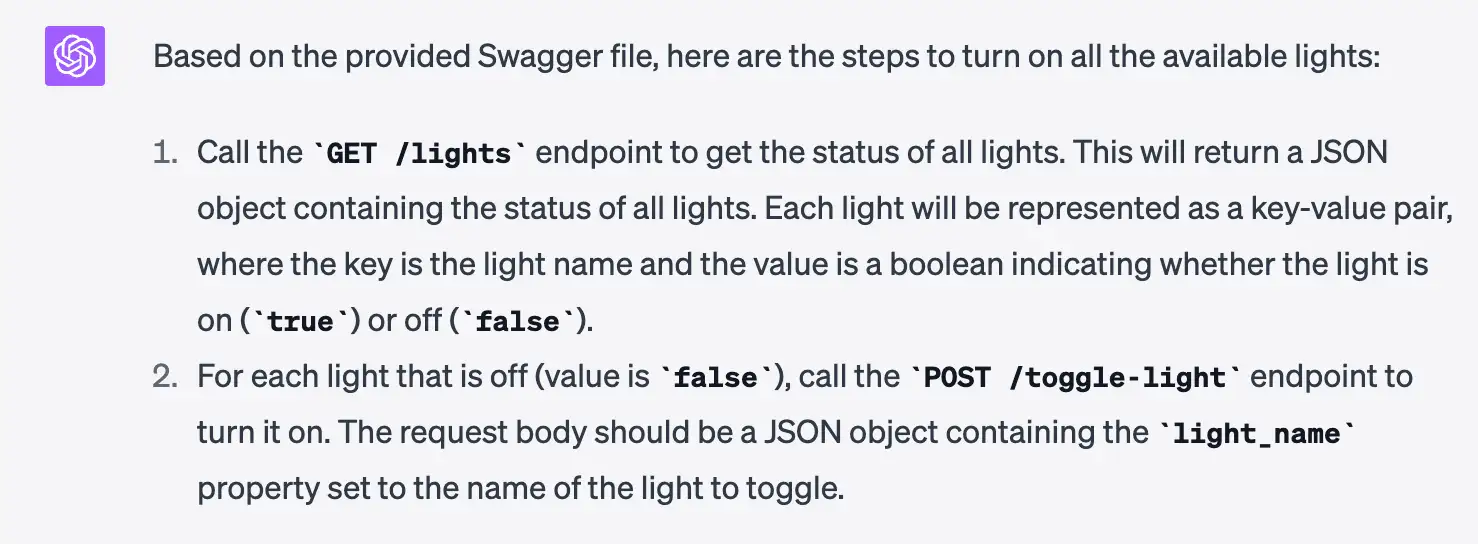

We can get some insight into how it works under the hood by simply asking ChatGPT to show us its thinking. If we paste in the OpenAPI YAML file and ask it to explain how to turn lights on and off, we get this:

We can even get it to generate a sequence diagram for us:

It’s pretty amazing. Now go and watch the video.